Biostat M280 Homework 4¶

Due June 2 @ 11:59PM

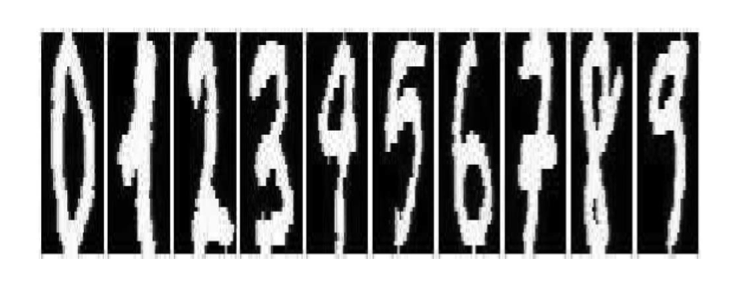

In this homework, we build a classifer for handwritten digit recognition. Following figure shows example bitmaps of handwritten digits from U.S. postal envelopes.

Each digit is represented by a $32 \times 32$ bitmap in which each element indicates one pixel with a value of white or black. Each $32 \times 32$ bitmap is divided into blocks of $4 \times 4$, and the number of white pixels are counted in each block. Therefore each handwritten digit is summarized by a vector $\mathbf{x} = (x_1, \ldots, x_{64})$ of length 64 where each element is a count between 0 and 16.

We will use a model-based method by assuming a distribution on the count vector and carry out classification using probabilities. A common distribution for count vectors is the multinomial distribution. However as you will see in Q10, it is not a good model for handwritten digits. Let's work on a more flexible model for count vectors. In the Dirichlet-multinomial model, we assume the multinomial probabilities $\mathbf{p} = (p_1,\ldots, p_d)$ follow a Dirichlet distribution with parameter vector $\alpha = (\alpha_1,\ldots, \alpha_d)$, $\alpha_j>0$, and density $$ \begin{eqnarray*} \pi(\mathbf{p}) = \frac{\Gamma(|\alpha|)}{\prod_{j=1}^d \Gamma(\alpha_j)} \prod_{j=1}^d p_j^{\alpha_j-1}, \end{eqnarray*} $$ where $|\alpha|=\sum_{j=1}^d \alpha_j$.

Q1¶

For a multivariate count vector $\mathbf{x}=(x_1,\ldots,x_d)$ with batch size $|\mathbf{x}|=\sum_{j=1}^d x_j$, show that the probability mass function for Dirichlet-multinomial distribution is $$ f(\mathbf{x} \mid \alpha) = \int_{\Delta_d} \binom{|\mathbf{x}|}{\mathbf{x}} \prod_{j=1}^d p_j^{x_j} \pi(\mathbf{p}) \, d \mathbf{p} = \binom{|\mathbf{x}|}{\mathbf{x}} \frac{\prod_{j=1}^d \Gamma(\alpha_j+x_j)}{\prod_{j=1}^d \Gamma(\alpha_j)} \frac{\Gamma(|\alpha|)}{\Gamma(|\alpha|+|\mathbf{x}|)} $$ where $\Delta_d$ is the unit simplex in $d$ dimensions and $|\alpha| = \sum_{j=1}^d \alpha_j$.

Q2¶

Given independent data points $\mathbf{x}_1, \ldots, \mathbf{x}_n$, show that the log-likelihood is $$ L(\alpha) = \sum_{i=1}^n \ln \binom{|\mathbf{x}_i|}{\mathbf{x}_i} + \sum_{i=1}^n \sum_{j=1}^d [\ln \Gamma(\alpha_j + x_{ij}) - \ln \Gamma(\alpha_j)] - \sum_{i=1}^n [\ln \Gamma(|\alpha|+|\mathbf{x}_i|) - \ln \Gamma(|\alpha|)]. $$ Is the log-likelihood a concave function?

Q3¶

Write Julia function to compute the log-density of the Dirichlet-multinomial distribution.

"""

dirmult_logpdf(x::Vector, α::Vector)

Compute the log-pdf of Dirichlet-multinomial distribution with parameter `α`

at data point `x`.

"""

function dirmult_logpdf(x::Vector, α::Vector)

# your code here

end

function dirmult_logpdf!(r::Vector, X::Matrix, α::Vector)

for j in 1:size(X, 2)

r[j] = dirmult_logpdf(X[:, j], α)

end

return r

end

"""

dirmult_logpdf(X, α)

Compute the log-pdf of Dirichlet-multinomial distribution with parameter `α`

at each data point in `X`. Each column of `X` is one data point.

"""

function dirmult_logpdf(X::Matrix, α::Vector)

r = zeros(size(X, 2))

dirmult_logpdf!(r, X, α)

end

Q4¶

Read in optdigits.tra, the training set of 3823 handwritten digits. Each row contains the 64 counts of a digit and the last element (65th element) indicates what digit it is. For grading purpose, evaluate the total log-likelihood of this data at parameter values $\alpha=(1,\ldots,1)$ using your function in Q3.

Q5¶

Derive the score function $\nabla L(\alpha)$, observed information matrix $-d^2L(\alpha)$, and Fisher information matrix $\mathbf{E}[-d^2L(\alpha)]$ for the Dirichlet-multinomial distribution.

Comment on why Fisher scoring method is inefficient for computing MLE in this example.

Q6¶

What structure does the observed information matrix possess that can facilitate the evaluation of the Newton direction? Is the observed information matrix always positive definite? What remedy can we take if it fails to be positive definite? (Hint: HW2 Q2.)

Q7¶

Discuss how to choose a good starting point. Implement this as the default starting value in your function below. (Hint: Method of moment estimator may furnish a good starting point.)

Q8¶

Write a function for finding MLE of Dirichlet-multinomial distribution given iid observations $\mathbf{x}_1,\ldots,\mathbf{x}_n$, using the Newton's method.

"""

dirmult_newton(X)

Find the MLE of Dirichlet-multinomial distribution using Newton's method.

# Argument

* `X`: an `n`-by-`d` matrix of counts; each column is one data point.

# Optional argument

* `alpha0`: a `d` vector of starting point (optional).

* `maxiters`: the maximum allowable Newton iterations (default 100).

* `tolfun`: the tolerance for relative change in objective values (default 1e-6).

# Output

* `maximum`: the log-likelihood at MLE.

* `estimate`: the MLE.

* `gradient`: the gradient at MLE.

* `hessian`: the Hessian at MLE.

* `se`: a `d` vector of standard errors.

* `iterations`: the number of iterations performed.

"""

function dirmult_newton(X::Matrix; α0::Vector = nothing,

maxiters::Int = 100, tolfun::Float64 = 1e-6)

# set default starting point from Q7

# Newton loop

for iter in 1:maxiters

# evaluate gradient (score)

# approximated observed information matrix

# compute Newton's direction

# line search loop

for lsiter in 1:10

# step halving

end

# check convergence criterion

if abs(logl - loglold) < tolfun * (abs(loglold) + 1)

break;

end

end

# compute logl, gradient, Hessian from final iterate

# output

end

Q9¶

Read in optdigits.tra, the training set of 3823 handwritten digits. Find the MLE for the subset of digit 0, digit 1, ..., and digit 9 separately using your function.

Q10¶

As $\alpha / |\alpha| \to \mathbf{p}$, the Dirichlet-multinomial distribution converges to a multinomial with parameter $\mathbf{p}$. Therefore multinomial can be considered as a special Dirichlet-multinomial with $|\alpha|=\infty$. Perform a likelihood ratio test (LRT) whether Dirichlet-multinomial offers a better fit than multinomial for digits 0, 1, ..., 9 respectively.

Q11¶

Now we can construct a simple Bayesian rule for handwritten digits recognition:

$$

\mathbf{x} \mapsto \arg \max_k \widehat \pi_k f(x|\widehat \alpha_k).

$$

Here we can use the proportion of digit $k$ in the training set as the prior probability $\widehat \pi_k$. Report the performance of your classifier on the test set of 1797 digits in optdigits.tes.