GPU Computing in Julia

This session introduces GPU computing in Julia.

versioninfo()

GPGPU

GPUs are ubiquitous in modern computers. The following are GPUs found in today's typical computer systems.

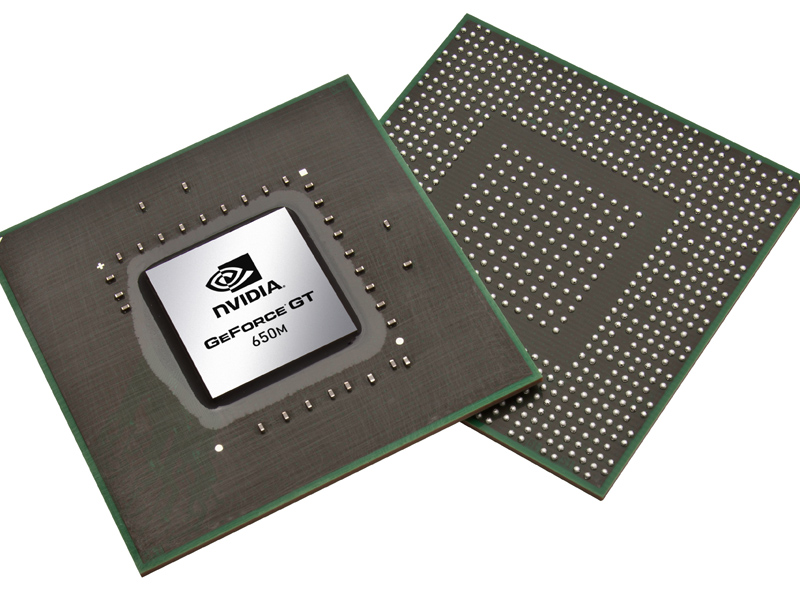

| NVIDIA GPUs | Tesla K80 | GTX 1080 | GT 650M |
|---|---|---|---|
| Computers | servers, cluster | desktop | laptop |
| Main usage | scientific computing | daily work, gaming | daily work |
| Memory | 24 GB | 8 GB | 1 GB |
| Memory bandwidth | 480 GB/sec | 320 GB/sec | 80 GB/sec |
| Number of cores | 4992 | 2560 | 384 |
| Processor clock | 562 MHz | 1.6 GHz | 0.9 GHz |
| Peak DP performance | 2.91 TFLOPS | 257 GFLOPS | |
| Peak SP performance | 8.73 TFLOPS | 8228 GFLOPS | 691 GFLOPS |

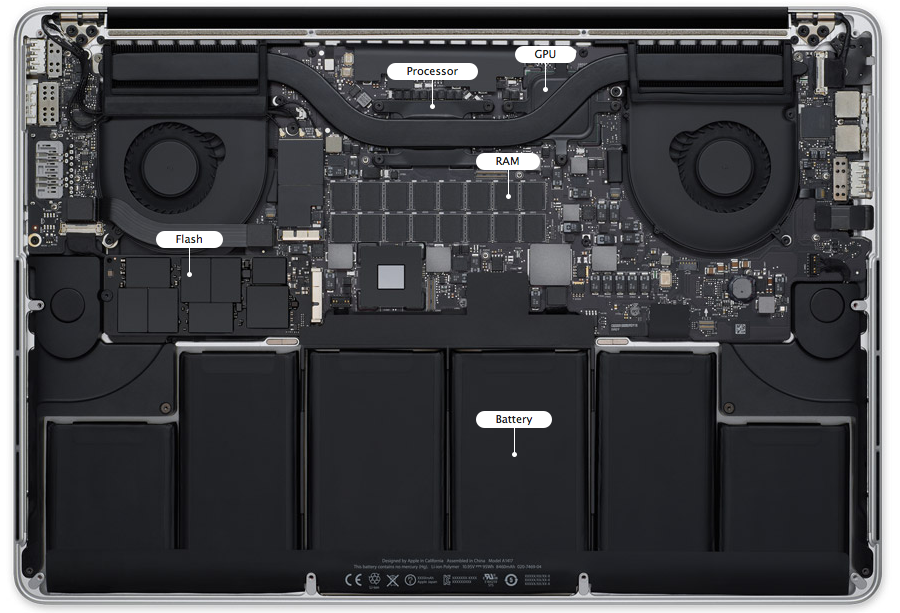

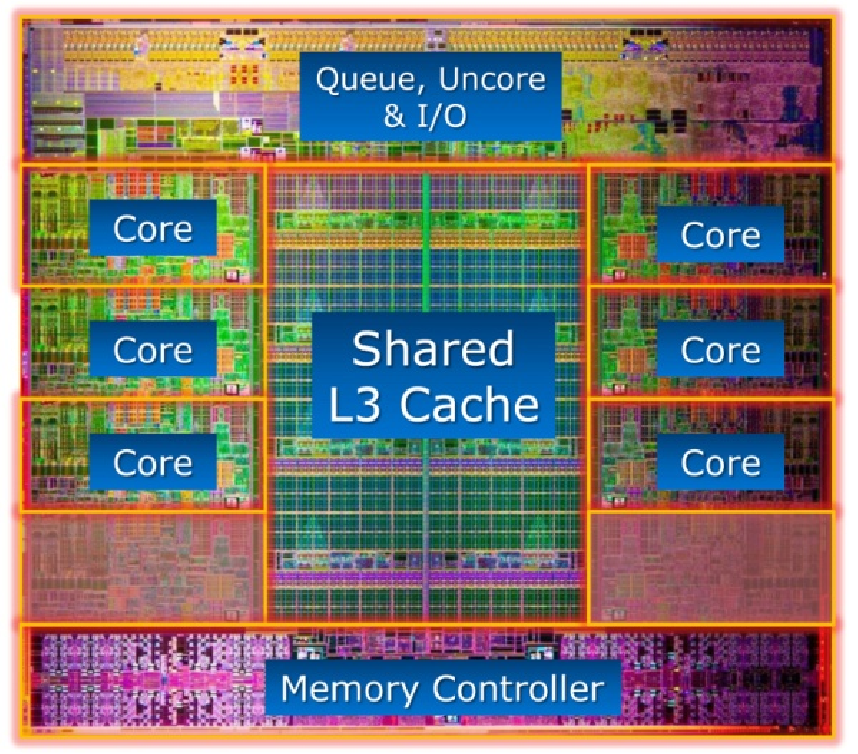

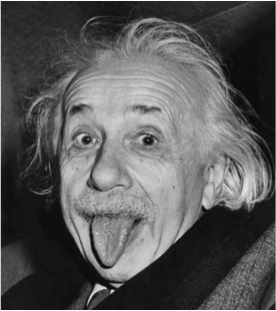

GPU architecture vs CPU architecture.

- GPUs contain hundreds to thousands of processing cores on a single card; several cards can fit in a desktop PC

- Each core carries out the same operations in parallel on different input data -- single program, multiple data (SPMD) paradigm

- Extremely high arithmetic intensity if one can transfer the data onto and results off of the processors quickly
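To make the last point concrete, here is a rough back-of-the-envelope sketch in Julia (it runs on the CPU; no GPU is required). It estimates the arithmetic intensity, meaning floating point operations per byte moved, of a single-precision n × n matrix multiplication and of an elementwise addition. The function names are hypothetical, introduced only for illustration, and the counts assume each matrix is transferred exactly once.

```julia
# Rough operation and byte counts; assumes each array is transferred exactly once.
# Function names are hypothetical, for illustration only.

# n-by-n matrix multiply: about 2n^3 flops, three n-by-n Float32 matrices moved
matmul_flops(n) = 2 * n^3
matmul_bytes(n) = 3 * n^2 * sizeof(Float32)

# elementwise add of two n-by-n matrices: n^2 flops, same three matrices moved
add_flops(n) = n^2
add_bytes(n) = 3 * n^2 * sizeof(Float32)

intensity(flops, bytes) = flops / bytes

n = 512
matmul_intensity = intensity(matmul_flops(n), matmul_bytes(n))  # flops per byte
add_intensity = intensity(add_flops(n), add_bytes(n))           # flops per byte

println("matmul: ", matmul_intensity, " flops/byte")
println("add:    ", add_intensity, " flops/byte")
```

Under these assumptions, matrix multiplication performs far more arithmetic per byte than elementwise addition, so it is the kind of operation more likely to benefit from a GPU once the transfer cost is counted.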


GPGPU in Julia

GPU support by Julia is under active development. Check JuliaGPU for currently available packages.

There are at least three paradigms for programming GPUs in Julia.

CUDA is an ecosystem exclusively for Nvidia GPUs. There are extensive CUDA libraries for scientific computing: cuBLAS, cuRAND, cuSPARSE, cuSOLVER, cuDNN, ...

The CuArrays.jl package allows defining arrays on Nvidia GPUs and overloads many common operations. CuArrays.jl supports Julia v1.0+.

OpenCL is a standard supported by multiple manufacturers (Nvidia, AMD, Intel, Apple, ...), but lacks some libraries essential for statistical computing.

The CLArrays.jl package allows defining arrays on OpenCL devices and overloads many common operations. Currently CLArrays.jl only supports Julia v0.6.

ArrayFire is a high performance library that works on both the CUDA and OpenCL frameworks.

The ArrayFire.jl package wraps the library for Julia.

Warning: The most recent Apple operating system, macOS 10.14 (Mojave), does not support CUDA yet.

Because my laptop has an AMD Radeon GPU, I'll illustrate using OpenCL on Julia v0.6.4.

Query GPU devices in the system

using CLArrays

# check available devices on this machine

CLArrays.devices()

# use the AMD Radeon Pro 460 GPU

dev = CLArrays.devices()[2]

CLArrays.init(dev)

Generate arrays on GPU devices

# generate GPU arrays

xd = rand(CLArray{Float32}, 5, 3)

yd = ones(CLArray{Float32}, 5, 3)

Transfer data between main memory and GPU

# transfer data from main memory to GPU

x = randn(5, 3)

xd = CLArray(x)

# transfer data from GPU to main memory

x = collect(xd)

Elementwise operations

zd = log.(yd .+ sin.(xd))

# recover x (exact only for entries of x within [-π/2, π/2], the range of asin)

asin.(exp.(zd) .- yd)
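The same dot-broadcast syntax works on ordinary CPU arrays, which makes it easy to check a GPU computation against a CPU reference. The sketch below is a CPU-only analogue of the cell above (it does not use CLArrays). As an assumption made for this illustration, the inputs are drawn from [0, 1) so that they lie within the range of asin and the round trip recovers x.

```julia
# CPU-only analogue of the GPU cell above; no GPU package is used here.
x = rand(Float32, 5, 3)   # values in [0, 1), inside [-π/2, π/2]
y = ones(Float32, 5, 3)

# elementwise log(1 + sin(x))
z = log.(y .+ sin.(x))

# invert the map: asin(exp(z) - 1) recovers x up to rounding error
xback = asin.(exp.(z) .- y)

# largest absolute round-trip error across all entries
println(maximum(abs.(xback .- x)))
```

With inputs from `randn`, entries outside [-π/2, π/2] would not be recovered, because asin only returns values in that interval.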

Linear algebra

zd = zeros(CLArray{Float32}, 3, 3)

# in-place zd = xd' * yd (Julia v0.6 API)

At_mul_B!(zd, xd, yd)

using BenchmarkTools

n = 512

xd = rand(CLArray{Float32}, n, n)

yd = rand(CLArray{Float32}, n, n)

zd = zeros(CLArray{Float32}, n, n)

# SP matrix multiplication on GPU

@benchmark A_mul_B!($zd, $xd, $yd)

x = rand(Float32, n, n)

y = rand(Float32, n, n)

z = zeros(Float32, n, n)

# SP matrix multiplication on CPU

@benchmark A_mul_B!($z, $x, $y)

We see a ~50-fold speedup in this matrix multiplication example.
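The calls above use the Julia v0.6 API. In Julia v0.7 and later, `A_mul_B!` and `At_mul_B!` were replaced by `mul!` from the `LinearAlgebra` standard library. A minimal CPU-only sketch of the equivalent calls, assuming Julia v1.0+, follows. The throughput estimate uses the usual 2n³ operation count and a single `@elapsed` run rather than BenchmarkTools, so it is only indicative.

```julia
using LinearAlgebra   # provides mul! (Julia v0.7+)

n = 512
x = rand(Float32, n, n)
y = rand(Float32, n, n)
z = zeros(Float32, n, n)

# Julia v1.0+ replacement for A_mul_B!(z, x, y): z = x * y, in place
mul!(z, x, y)

# Julia v1.0+ replacement for At_mul_B!(zt, x, y): zt = transpose(x) * y, in place
zt = zeros(Float32, n, n)
mul!(zt, transpose(x), y)

# crude throughput estimate from one timed run (after the warm-up calls above)
t = @elapsed mul!(z, x, y)
gflops = 2 * n^3 / t / 1e9
println("approx. ", round(gflops, digits = 1), " GFLOPS on the CPU")
```

The reported figure depends on the machine and the BLAS library in use, so it should be read as a rough reference point rather than a benchmark result.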